INTRODUCTION

For Lab 10, our class was tasked with grid localization using a Bayes filter. This filter takes in sensor measurements, control inputs, and a "belief" in order to determine the robot's position on a predetermined map. This belief can be thought of as the robot's interpretation of where it might be on the map, expressed as a probability for every possible pose. By combining this belief with statistics and Bayesian inference, we can determine with relatively high precision where the robot is on the map.

THE ALGORITHM

inputs

The algorithm used to implement the Bayes filter is taken from Professor Kirsten Peterson's lecture 19 slides. We start with a belief matrix that describes the probability that our robot is in each state. The matrix has one entry for every possible state the robot could be in. We also take in the control inputs (delta_rot1, delta_trans, and delta_rot2) in the form of u_t. The final input, z_t, represents our sensor readings as an 18-element list of distances taken at specified angles.
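For reference, the standard textbook form of the discrete Bayes filter is written out below. We assume it matches the lecture slide, although the slide's notation may differ slightly.

```latex
\begin{aligned}
&\textbf{Algorithm Bayes\_Filter}\big(bel(x_{t-1}),\, u_t,\, z_t\big): \\
&\quad \text{for all } x_t \text{ do} \\
&\qquad \overline{bel}(x_t) = \sum_{x_{t-1}} p(x_t \mid u_t, x_{t-1})\, bel(x_{t-1}) \\
&\qquad bel(x_t) = \eta\, p(z_t \mid x_t)\, \overline{bel}(x_t) \\
&\quad \text{end for} \\
&\quad \text{return } bel(x_t)
\end{aligned}
```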

prediction

In the prediction step, we estimate the belief in a specific state by multiplying the probability that we were in a previous state by the probability that our control inputs moved us from that previous state into the new one. Summing this product over every previous state gives the predicted belief, bel_bar. In other words, we use a motion model to predict how likely it is that the robot ended up in each state, given how far it tried to move. This step generally increases the uncertainty in our position, because the robot has moved and we can only estimate that motion through our model (in this case a Gaussian distribution). We then carry this estimate into the next step of the Bayes filter, the update step.
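The sum in the prediction step can be illustrated with a toy example. The sketch below uses a hypothetical one-dimensional, five-cell world rather than the lab's 12 × 9 × 18 grid. The motion model `toy_motion_prob` is an invented stand-in: the robot moves the commanded number of cells with probability 0.8 and lands one cell short or long with probability 0.1 each.

```python
def predict(bel, commanded_move, motion_prob):
    """Prediction step: bel_bar(x) = sum over x_prev of p(x | u, x_prev) * bel(x_prev)."""
    n = len(bel)
    bel_bar = [0.0] * n
    for x in range(n):              # every candidate new state
        for x_prev in range(n):     # sum over every possible previous state
            bel_bar[x] += motion_prob(x, x_prev, commanded_move) * bel[x_prev]
    return bel_bar


def toy_motion_prob(x, x_prev, u):
    """Hypothetical motion model: intended move 80%, one cell short or long 10% each."""
    actual = x - x_prev
    if actual == u:
        return 0.8
    if abs(actual - u) == 1:
        return 0.1
    return 0.0


bel = [0.0, 1.0, 0.0, 0.0, 0.0]          # robot known to be in cell 1
bel_bar = predict(bel, 1, toy_motion_prob)
print([round(p, 2) for p in bel_bar])    # [0.0, 0.1, 0.8, 0.1, 0.0]
```

Starting from a belief concentrated in a single cell, the predicted belief spreads over three cells. This is the growth in uncertainty that the prediction step introduces.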

update

The update step is where uncertainty is reduced. We incorporate the sensor readings in order to pinpoint our position. The belief in the current pose becomes our prior estimate, bel_bar, multiplied by the probability of obtaining our sensor readings in that pose. The η term simply normalizes the belief matrix so that it sums to one, as any probability distribution should.
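The update step can be shown with a toy one-dimensional example of five hypothetical cells. The code multiplies bel_bar by a per-cell sensor likelihood p(z_t | x_t) and then applies η. The numbers are made up for illustration.

```python
def update(bel_bar, likelihoods):
    """Update step: bel(x) = eta * p(z | x) * bel_bar(x), with eta chosen so bel sums to one."""
    unnormalized = [p_z * b for p_z, b in zip(likelihoods, bel_bar)]
    eta = 1.0 / sum(unnormalized)        # the normalizer
    return [eta * v for v in unnormalized]


bel_bar = [0.0, 0.1, 0.8, 0.1, 0.0]      # a spread-out predicted belief
likelihoods = [0.1, 0.1, 0.7, 0.1, 0.1]  # hypothetical p(z | x): reading fits cell 2 best
bel = update(bel_bar, likelihoods)
print([round(p, 3) for p in bel])        # [0.0, 0.017, 0.966, 0.017, 0.0]
```

Because the reading agrees with the prediction, the belief concentrates on cell 2, rising from 0.8 to about 0.97.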

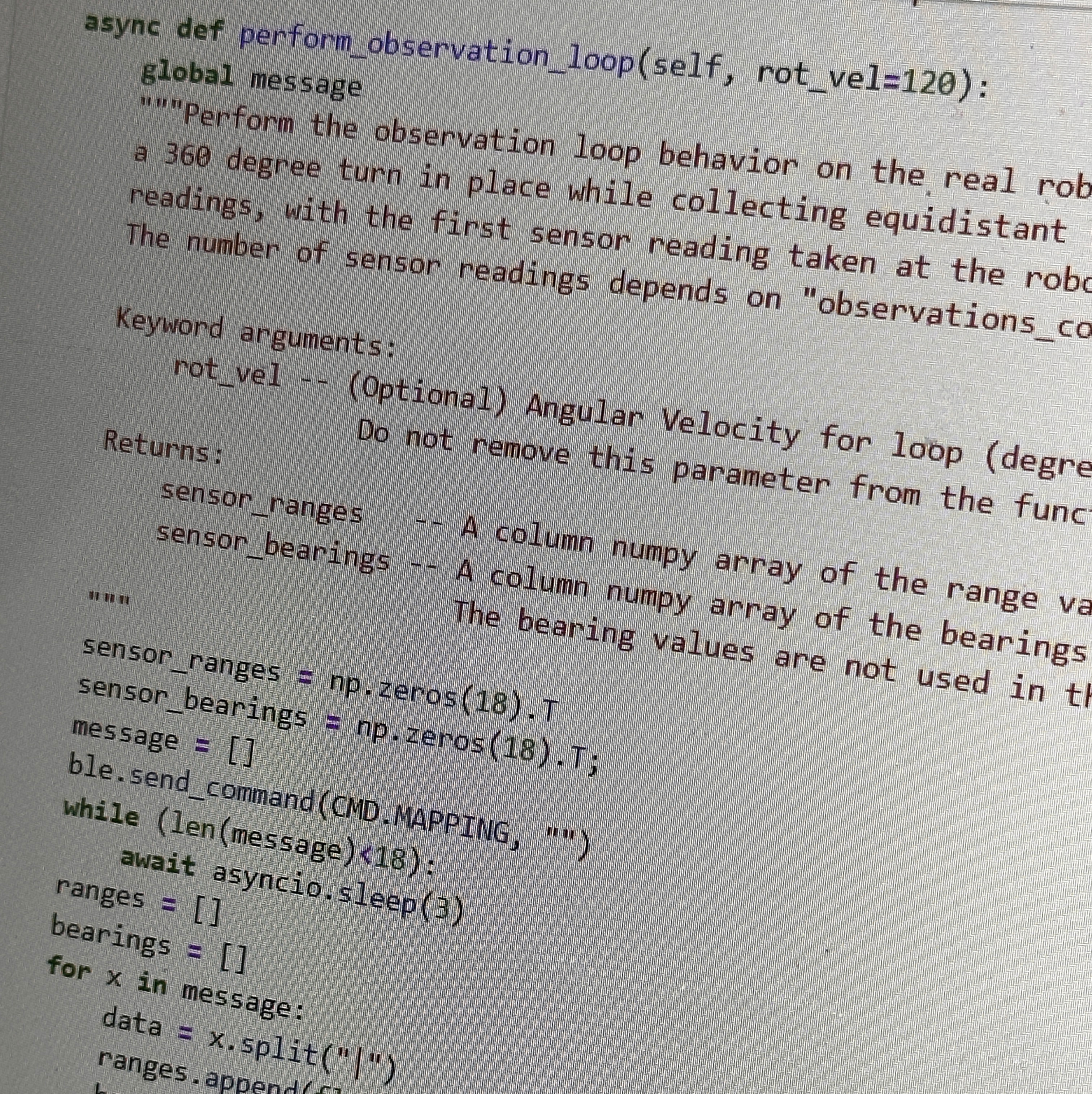

THE CODE

Now that I know what the Bayes filter actually is, I can try to implement it on our simulated robot. The simulator's noisy odometry and sensor readings mimic a real robot, giving a good representation of the physical version without all the complications of reality.

compute_control

The compute_control function derives the control input u_t that would produce the pose change seen in its inputs (from prev_pose to cur_pose). Lecture 17 has an excellent slide illustrating how to do this.

As seen in the code above, I derived the expression for each of our three control inputs from the equations on that slide. These equations represent any motion of the robot as two rotations and a translation: turn toward the new position, drive straight to it, then turn to the final heading.
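Below is a minimal sketch of compute_control using the standard rotation-translation-rotation odometry decomposition. It assumes poses are (x, y, theta) tuples with theta in degrees. The normalize_angle helper, which wraps angles to [-180, 180), is written here for illustration and may differ from the lab's own helper.

```python
import math


def normalize_angle(angle_deg):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def compute_control(cur_pose, prev_pose):
    """Return (delta_rot1, delta_trans, delta_rot2) that takes prev_pose to cur_pose.

    Poses are assumed to be (x, y, theta) with theta in degrees.
    """
    x_prev, y_prev, theta_prev = prev_pose
    x_cur, y_cur, theta_cur = cur_pose
    dx, dy = x_cur - x_prev, y_cur - y_prev

    # First rotation: turn from the old heading toward the new position
    delta_rot1 = normalize_angle(math.degrees(math.atan2(dy, dx)) - theta_prev)
    # Translation: straight-line distance between the two positions
    delta_trans = math.hypot(dx, dy)
    # Second rotation: turn from the travel direction to the final heading
    delta_rot2 = normalize_angle(theta_cur - theta_prev - delta_rot1)
    return delta_rot1, delta_trans, delta_rot2


# Moving from the origin facing 0 degrees to (1, 1) facing 90 degrees:
# roughly a 45-degree turn, a sqrt(2) drive, then another 45-degree turn.
print(compute_control((1.0, 1.0, 90.0), (0.0, 0.0, 0.0)))
```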

odom_motion_model

The odometry model above computes the probability of the robot's new state given its previous state and an action. It takes the intended translation or rotation and models the actual result as a Gaussian distribution (with a sigma given by the task). To estimate the probability of the whole motion, we assume the three components (first rotation, translation, and second rotation) are independent events and multiply their probabilities together.
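Below is a sketch of odom_motion_model under the same assumptions (poses as (x, y, theta) with theta in degrees). The values of rot_sigma and trans_sigma are placeholders, not the task's values. The helpers are repeated so the block runs on its own.

```python
import math


def gaussian(x, mu, sigma):
    """Density of the normal distribution N(mu, sigma^2) evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))


def normalize_angle(angle_deg):
    """Wrap an angle in degrees to [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def compute_control(cur_pose, prev_pose):
    """(delta_rot1, delta_trans, delta_rot2) taking prev_pose to cur_pose."""
    dx, dy = cur_pose[0] - prev_pose[0], cur_pose[1] - prev_pose[1]
    rot1 = normalize_angle(math.degrees(math.atan2(dy, dx)) - prev_pose[2])
    trans = math.hypot(dx, dy)
    rot2 = normalize_angle(cur_pose[2] - prev_pose[2] - rot1)
    return rot1, trans, rot2


def odom_motion_model(cur_pose, prev_pose, u, rot_sigma=15.0, trans_sigma=0.1):
    """p(cur_pose | u, prev_pose), assuming the three motion components are independent.

    rot_sigma (degrees) and trans_sigma (meters) are placeholder values.
    """
    rot1, trans, rot2 = compute_control(cur_pose, prev_pose)  # motion this transition requires
    u_rot1, u_trans, u_rot2 = u                                # motion the odometry reported
    p_rot1 = gaussian(normalize_angle(rot1 - u_rot1), 0.0, rot_sigma)
    p_trans = gaussian(trans, u_trans, trans_sigma)
    p_rot2 = gaussian(normalize_angle(rot2 - u_rot2), 0.0, rot_sigma)
    return p_rot1 * p_trans * p_rot2  # independence: multiply the three probabilities


u = (45.0, math.sqrt(2.0), 45.0)
p_match = odom_motion_model((1.0, 1.0, 90.0), (0.0, 0.0, 0.0), u)
p_miss = odom_motion_model((2.0, 2.0, 90.0), (0.0, 0.0, 0.0), u)
print(p_match > p_miss)  # True
```

Comparing the angle differences through normalize_angle keeps a 359-degree and a -1-degree rotation from being treated as far apart.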

prediction_step

Now that the building blocks are in place, we can combine them in the prediction step. Here we start by computing the control u_t. We then use a strange but necessary set of six nested for loops. To update bel_bar with our motion model, we need to apply it across every pair of states. Three loops run over every previous state and three over every current state. Each set covers all x grid locations (12), all y grid locations (9), and all angles (18) in our discretized space. For every grid location, we calculate a new belief from the odometry model and the previous belief in that grid cell. The result is a new belief matrix (bel_bar). It isn't normalized, but it represents how our certainty changes given the robot's motion. I chose to normalize this intermediate belief, but this isn't necessary. As a side note, this operation can become computationally expensive very quickly. The grid has 12 × 9 × 18 = 1,944 states, so a full pass evaluates the motion model for roughly 1,944² ≈ 3.8 million pairs of states. To counteract that, I skip previous states whose belief is less than 0.001. This makes the filter slightly less accurate but far faster.
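The loop structure can be sketched as follows. To stay self-contained, this version stores beliefs in nested lists rather than arrays. It also takes a hypothetical motion_prob callback in place of the odometry model evaluated between grid-cell centers. The demo grid is a tiny 3 × 2 × 4 instead of 12 × 9 × 18, and toy_motion_prob is invented for illustration.

```python
def zeros(nx, ny, na):
    """Nested-list grid of shape (nx, ny, na) filled with 0.0."""
    return [[[0.0] * na for _ in range(ny)] for _ in range(nx)]


def prediction_step(bel, u, motion_prob, threshold=0.001):
    """Return bel_bar[cx][cy][ca] = sum over previous cells of p(cur | u, prev) * bel[prev].

    motion_prob(cur_cell, prev_cell, u) is a hypothetical stand-in for the odometry model.
    """
    nx, ny, na = len(bel), len(bel[0]), len(bel[0][0])
    bel_bar = zeros(nx, ny, na)
    # Loops 1-3: every previous state (x, y, angle)
    for px in range(nx):
        for py in range(ny):
            for pa in range(na):
                prior = bel[px][py][pa]
                if prior < threshold:
                    continue  # skip unlikely previous states to save time
                # Loops 4-6: every candidate current state (x, y, angle)
                for cx in range(nx):
                    for cy in range(ny):
                        for ca in range(na):
                            p = motion_prob((cx, cy, ca), (px, py, pa), u)
                            bel_bar[cx][cy][ca] += p * prior
    # Optional: normalize the intermediate belief so it sums to one
    total = sum(v for plane in bel_bar for row in plane for v in row)
    return [[[v / total for v in row] for row in plane] for plane in bel_bar]


def toy_motion_prob(cur, prev, u):
    """Hypothetical model: x advances u cells w.p. 0.8 or stays w.p. 0.2; y and angle unchanged."""
    if cur[1:] != prev[1:]:
        return 0.0
    dx = cur[0] - prev[0]
    if dx == u:
        return 0.8
    if dx == 0:
        return 0.2
    return 0.0


bel = zeros(3, 2, 4)
bel[0][1][2] = 1.0        # robot starts in cell (0, 1, 2)
bel[2][0][0] = 0.0005     # below the threshold, so this cell is pruned
bel_bar = prediction_step(bel, 1, toy_motion_prob)
print(bel_bar[1][1][2], bel_bar[0][1][2], bel_bar[2][0][0])  # 0.8 0.2 0.0
```

The cell holding 0.0005 falls below the 0.001 threshold, so its motion is never propagated. This is the accuracy-for-speed trade-off made by the threshold.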

sensor_model

The short script above, much like the odometry model, is a helper method that calculates the probability of the sensor readings given a particular state. It outputs an 18-element list containing the probability of each range measurement the robot gathers while spinning in a full circle. These probabilities are used in the final step of the Bayes filter.
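Below is a sketch of a Gaussian sensor model. It assumes the map-predicted ranges for the candidate pose (`expected_ranges`) are already available. The `sensor_sigma` of 0.1 m is a placeholder value.

```python
import math


def gaussian(x, mu, sigma):
    """Density of the normal distribution N(mu, sigma^2) evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))


def sensor_model(obs_ranges, expected_ranges, sensor_sigma=0.1):
    """Return p(z_i | x) for each range reading taken during the 360-degree spin.

    obs_ranges: measured distances; expected_ranges: distances the map predicts
    for the candidate pose. sensor_sigma (meters) is a placeholder value.
    """
    if len(obs_ranges) != len(expected_ranges):
        raise ValueError("need one expected range per observation")
    return [gaussian(z, z_hat, sensor_sigma) for z, z_hat in zip(obs_ranges, expected_ranges)]


expected = [1.0] * 18              # hypothetical map-predicted ranges for one pose
observed = [1.0] * 17 + [1.3]      # last reading disagrees with the map by 0.3 m
probs = sensor_model(observed, expected)
print(len(probs), probs[0] > probs[-1])  # 18 True
```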

update_step

Here's where all the previous work comes together. In the update step, the predicted belief (bel_bar) is updated to account for the sensor readings in the robot's new state. To do that, I loop through all the states (three for loops, as before). In each grid location, I multiply the probability of getting the current sensor readings by the predicted belief. This yields a final belief that, once normalized, represents the estimated position of the robot.
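Below is a sketch of update_step over a nested-list grid. It assumes `expected_views[i][j][k]` holds the 18 map-predicted ranges for each cell, which is a hypothetical layout. The 18 readings are treated as independent, so their likelihoods multiply.

```python
import math


def gaussian(x, mu, sigma):
    """Density of the normal distribution N(mu, sigma^2) evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))


def update_step(bel_bar, obs_ranges, expected_views, sensor_sigma=0.1):
    """bel(x) = eta * p(z | x) * bel_bar(x) over a 3D grid stored as nested lists.

    sensor_sigma (meters) is a placeholder value.
    """
    nx, ny, na = len(bel_bar), len(bel_bar[0]), len(bel_bar[0][0])
    bel = [[[0.0] * na for _ in range(ny)] for _ in range(nx)]
    for i in range(nx):
        for j in range(ny):
            for k in range(na):
                # Per-reading likelihoods for this cell, combined by multiplication
                likelihoods = [gaussian(z, z_hat, sensor_sigma)
                               for z, z_hat in zip(obs_ranges, expected_views[i][j][k])]
                bel[i][j][k] = math.prod(likelihoods) * bel_bar[i][j][k]
    eta = 1.0 / sum(p for plane in bel for row in plane for p in row)  # normalizer
    return [[[eta * p for p in row] for row in plane] for plane in bel]


bel_bar = [[[0.5]], [[0.5]]]                        # 2 x 1 x 1 grid, uniform prior
expected_views = [[[[1.0] * 18]], [[[1.2] * 18]]]   # hypothetical map views per cell
obs = [1.05] * 18                                   # readings closer to cell 0's view
bel = update_step(bel_bar, obs, expected_views)
print(bel[0][0][0] > 0.999)                         # True: nearly all mass on cell 0
```

Multiplying 18 densities can overflow or underflow for large grids or small sigmas. Summing log-likelihoods before exponentiating is a common way to avoid that.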

SIMULATION

The Bayes filter shown above is an incredibly powerful tool. It uses statistics and computation to derive a physical location from often poorly estimated inputs. I'm happy to say I was able to get the filter running in simulation in no time, thanks to Anya Prabowo's help and Professor Peterson's lectures. Below are videos of the filter in action. Whiter grid locations indicate a higher belief, and the second video shows a blue "belief" line that indicates the estimated position. Both videos show excellent location estimates despite the poor odometry (in red). The Bayes filter works least well in areas where the map looks symmetrical, such as the center of the map. This is due to the way the sensors see the world: symmetrical regions produce similar range readings from different poses, so the sensor model has trouble telling those poses apart. The robot becomes less

REFERENCES

This report would not have been possible without the incredible help and guidance from Anya Prabowo (her website here) and Professor Kirsten Peterson (her lecture slides here).