INTRODUCTION

For Lab 11, we take the theory from Lab 10 and apply it to the real robot. Grid localization on a real robot means dealing with an imperfect world. For instance, the algorithm from Lab 10 predicted the robot's position from its control inputs and an odometry model, but no odometry model we could come up with was anything other than egregiously incorrect. The solution is to skip the prediction step of the Bayes filter and update the belief using only the sensor measurements. Below is an image from a simulation of the virtual robot illustrating what the belief versus the actual readings could look like.

IMPLEMENTATION

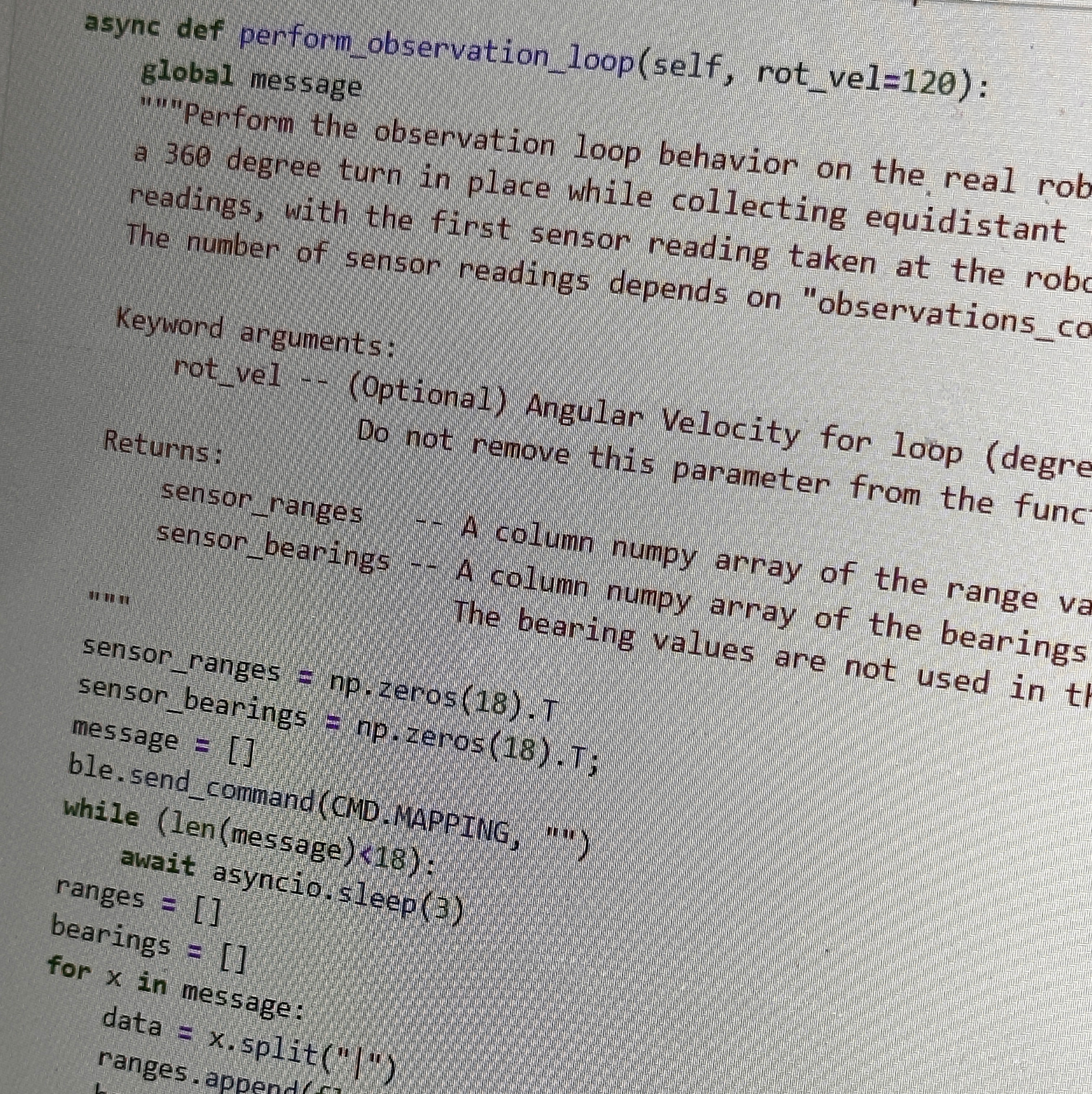
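Skipping the prediction step leaves only the measurement update: the new belief at each grid cell is the prior multiplied by the likelihood of the measured ranges, normalized over the whole grid. The sketch below illustrates that update in plain Python. It assumes the expected range at every bearing has already been computed for each cell from the map, and that each reading carries independent Gaussian noise with one standard deviation `sigma`. The function name `update_only`, the dictionary layout, and the toy numbers are hypothetical and are not taken from the Lab 10 framework.

```python
import math


def update_only(prior, expected, measured, sigma):
    """Measurement-only Bayes filter update over a discrete grid (hypothetical helper).

    prior:    dict mapping cell -> prior probability (at least one must be > 0)
    expected: dict mapping cell -> list of expected ranges, one per bearing
    measured: list of measured ranges, in the same bearing order
    sigma:    assumed standard deviation of the range sensor noise
    """
    log_post = {}
    for cell, p in prior.items():
        # Sum of Gaussian log-likelihoods. The Gaussian normalizing constant is
        # the same for every cell, so it cancels during normalization and is dropped.
        log_lik = sum(-0.5 * ((z - z_hat) / sigma) ** 2
                      for z, z_hat in zip(measured, expected[cell]))
        log_post[cell] = (math.log(p) if p > 0 else -math.inf) + log_lik

    # Subtract the largest log-probability before exponentiating to avoid underflow.
    peak = max(log_post.values())
    unnorm = {cell: math.exp(v - peak) for cell, v in log_post.items()}
    total = sum(unnorm.values())
    return {cell: v / total for cell, v in unnorm.items()}


# Toy example with made-up numbers (three readings instead of 18).
prior = {"A": 0.5, "B": 0.5}
expected = {"A": [1.0, 2.0, 1.5], "B": [0.5, 0.5, 0.5]}
measured = [1.0, 2.0, 1.5]
posterior = update_only(prior, expected, measured, sigma=0.1)
best = max(posterior, key=posterior.get)  # cell with the highest belief
```

Working in log space matters once all 18 readings are multiplied together, since the raw product of small likelihoods can underflow to zero for most cells. If the grid also discretizes heading, each `cell` key would simply be a tuple such as `(x, y, theta)` indices.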

Gathering real-world data is fairly straightforward, and I chose to modify my code from Lab 9. Below is the Arduino code used to gather the 18 data points, each containing a bearing and a distance, needed for the update step of the Bayes filter. It is followed by the observation loop function that replaces the virtual robot's observation loop from Lab 10.

This code sends each bearing and distance pair as a single Bluetooth transmission. To use this data in Python, we have to wait for each incoming point.
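On the Python side, each transmission has to be decoded into a (bearing, distance) pair as it arrives. The sketch below assumes the Arduino side formats every message as a short string such as `40|812` (bearing in degrees, distance in millimetres); that message format and the names `parse_reading`, `notification_handler`, and `readings` are hypothetical rather than taken from the lab code. The handler takes a sender argument plus the payload so it could be registered as a Bluetooth notification callback, but the exact callback signature depends on the BLE wrapper in use.

```python
readings = []  # (bearing_deg, distance_mm) pairs, in arrival order


def parse_reading(payload):
    """Decode one transmission such as b"40|812" into (bearing, distance) floats."""
    if isinstance(payload, (bytes, bytearray)):
        payload = payload.decode("utf-8")
    bearing_str, distance_str = payload.strip().split("|")
    return float(bearing_str), float(distance_str)


def notification_handler(_sender, payload):
    """Callback for each incoming Bluetooth message: store the parsed pair."""
    readings.append(parse_reading(payload))


# Simulate two incoming messages.
notification_handler(None, bytearray(b"0|1203"))
notification_handler(None, b"20|987")
```

Keeping parsing separate from the callback makes it easy to test the message format without a robot connected.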

Using asyncio.sleep(1), I waited for all of the points to arrive before passing them to the Bayes filter.
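One way to structure that wait is to poll the list of received readings with `asyncio.sleep` until all 18 have arrived, then hand them to the filter as ordered lists. The sketch below does this with a timeout so a dropped message does not hang the notebook forever. It assumes the pairs arrive as (bearing in degrees, distance in millimetres) and that the filter expects ranges in metres; both are assumptions, as are the names `wait_for_readings` and `to_observation_lists`. The `fake_robot` coroutine only stands in for the Bluetooth handler.

```python
import asyncio

NUM_READINGS = 18  # one reading per 20 degrees if the turn is evenly spaced (360 / 18)


async def wait_for_readings(readings, expected=NUM_READINGS, poll_s=1.0, timeout_s=60.0):
    """Poll with asyncio.sleep until `expected` pairs are in `readings` (hypothetical helper)."""
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout_s
    while len(readings) < expected:
        if loop.time() > deadline:
            raise TimeoutError(f"only {len(readings)} of {expected} readings arrived")
        await asyncio.sleep(poll_s)  # yield so the Bluetooth callbacks can run
    return list(readings[:expected])


def to_observation_lists(pairs):
    """Sort (bearing_deg, distance_mm) pairs by bearing; return ranges in metres and bearings."""
    ordered = sorted(pairs, key=lambda pair: pair[0] % 360)
    ranges_m = [distance_mm / 1000.0 for _, distance_mm in ordered]  # assumed mm -> m
    bearings_deg = [bearing % 360 for bearing, _ in ordered]
    return ranges_m, bearings_deg


async def demo():
    readings = []

    async def fake_robot():
        # Stand-in for the Bluetooth handler: appends one pair every 10 ms.
        for i in range(NUM_READINGS):
            await asyncio.sleep(0.01)
            readings.append((20.0 * i, 500.0 + i))

    producer = asyncio.create_task(fake_robot())
    pairs = await wait_for_readings(readings, poll_s=0.05, timeout_s=5.0)
    await producer
    return to_observation_lists(pairs)


ranges_m, bearings_deg = asyncio.run(demo())
```

Inside a Jupyter notebook an event loop is already running, so `asyncio.run` raises an error there; awaiting the coroutine directly (`await demo()`) is the usual alternative. Polling with a short sleep trades a little latency for simplicity compared with signalling completion through an `asyncio.Event`.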

RESULTS

The following are the positions estimated by the Bayes filter for each of the marked positions on the map. The red dot is the real position, and the blue dot is the Bayes filter estimate.

point (0,3)

point (-3,-2)

point (5,-3)

point (5,3)

DISCUSSION

Overall, the results were great! As the images above show, the estimated position never strayed far from the actual point. One interesting observation was the occasional stray estimate caused by the symmetry of the map and the position of the robot. Points (5,-3) and (0,3), for example, can look similar depending on the robot's orientation. Because the robot starts without a definite initial position, the filter sometimes got confused and placed the robot at the wrong point. Below is a video depicting the process: