INTRODUCTION

In Labs 1 through 5, I built a robot that could be debugged and commanded over Bluetooth. In Labs 6 through 8, I implemented closed-loop PID control and a Kalman Filter. In Lab 9, the robot mapped its surroundings. In Labs 10 and 11, it localized to determine its position. To wrap up the semester, all of this is integrated and used to navigate through the positions below.

STRATEGY

To get through all these points, I could have hard-coded a working path into the robot and been done within a day, but what's the fun in that? I've learned many clever solutions to fundamental issues in robotics, and I wasn't going to ignore them.

First, I had to decide what the main loop looked like. The most elegant solution, I decided, was to update all my sensors as fast as possible and run PID control constantly, changing reference values such as speed and angle to position the robot. Once the robot moved into position, it would localize and then move from its highest-belief coordinate to some goal coordinate. This meant there were only four main functions that I had to use:

updateSensors()

PIDControl()

MAP()

MoveTo(CX,CY,GX,GY)

FUNCTIONS

updateSensors()

I've been using the above code snippet since Lab 6 and it's been incredibly helpful. This function runs as soon as the bot connects through Bluetooth and simply updates global variables that store data from the IMU and Time of Flight sensors. It also integrates the gyroscope data to get the yaw orientation used by PIDControl.
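The yaw integration mentioned above can be sketched in a few lines. This is a minimal Python illustration, not the Artemis code itself; the function name `integrate_yaw`, the sample rate, and the sample values are assumptions made for the example.

```python
def integrate_yaw(yaw_deg, gyro_z_dps, dt_s):
    """Advance the yaw estimate by one gyroscope sample.

    yaw_deg    -- current yaw estimate in degrees
    gyro_z_dps -- angular rate about the vertical axis in degrees per second
    dt_s       -- time since the previous sample in seconds
    """
    return yaw_deg + gyro_z_dps * dt_s


# Hypothetical data: the robot spins at 90 deg/s for ten 10 ms samples.
yaw = 0.0
for rate in [90.0] * 10:
    yaw = integrate_yaw(yaw, rate, 0.01)
# yaw is now roughly 9 degrees
```

Because every sample adds a little gyroscope bias to the running sum, the estimate drifts over time, which is the problem the compass section below tries to address.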

PIDControl()

The PIDControl function executes closed-loop control to correct the orientation about some reference angle variable (REFERENCE).

The value of the control input is then added to the baseSpeed which sets the forward or backward speed of the robot.

This function wouldn't always be on, as that would often cause the robot to shake itself out of position. Instead, I set a flag variable to "turn on" PIDControl through a BLE command right before executing the main Python loop, which I'll describe in more detail later on.
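A rough sketch of this kind of orientation controller is given here in Python for readability. The class `YawPID`, the helper `motor_commands`, the gains, and the way the control input is split across the two motors (added on one side, subtracted on the other) are all assumptions for illustration, not the code that ran on the robot.

```python
class YawPID:
    """PID controller on yaw error, in degrees (illustrative only)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, reference_deg, yaw_deg, dt_s):
        # Wrap the error into [-180, 180) so the robot turns the short way.
        error = (reference_deg - yaw_deg + 180.0) % 360.0 - 180.0
        self.integral += error * dt_s
        if self.prev_error is None:
            derivative = 0.0  # no previous sample yet
        else:
            derivative = (error - self.prev_error) / dt_s
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def motor_commands(base_speed, u, limit=255):
    """Mix base speed and control input into (left, right) PWM values."""
    def clamp(value):
        return max(-limit, min(limit, value))
    return clamp(base_speed + u), clamp(base_speed - u)


pid = YawPID(kp=2.0, ki=0.0, kd=0.0)      # hypothetical gains
u = pid.step(reference_deg=10.0, yaw_deg=0.0, dt_s=0.01)
left, right = motor_commands(base_speed=100, u=u)
```

With a base speed of zero the same controller spins the robot in place, and a nonzero base speed drives it forward or backward while holding the reference angle.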

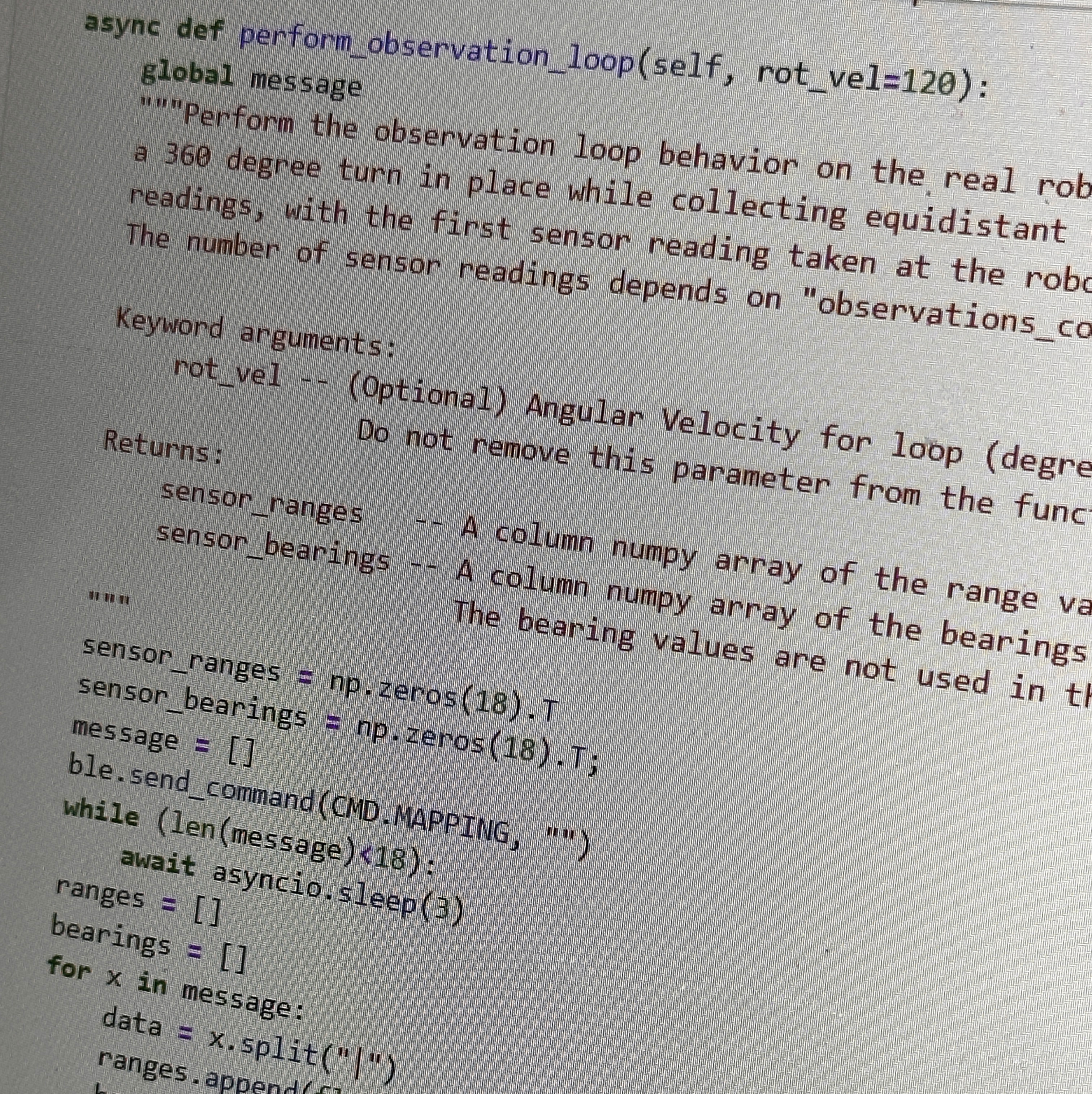

MAP()

I used this function back in Lab 9 to map the arena. I made some slight modifications and wrote a helper function, Circle(), for debugging purposes. By separating the functions out, I was able to fine-tune the PID gains that would best let the robot spin on its axis with as little drift as possible. This function was executed as part of the loc.perform_observation_loop function in the Python code, which gathered data on the surroundings of the robot to be used for localization.

MoveTo(CX,CY,GX,GY)

This was by far the most important function for this lab. It took in the current (CX, CY) and goal (GX, GY) coordinates and translated them into reference values for angle and distance. It also reused the exact same Kalman Filter I implemented in Lab 7 to make the distance readings more accurate. The angle is determined from simple trigonometry, and the distance traveled is determined by tracking the difference in KF distance readings between the start and end of the motion.
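The trigonometry behind MoveTo can be sketched as follows. This is a Python illustration under assumptions, not the Artemis implementation: the helper names are hypothetical, angles are measured counterclockwise from the positive X axis, and the Time of Flight sensor is assumed to face forward so that its filtered reading shrinks as the robot advances.

```python
import math


def move_to_references(cx, cy, gx, gy):
    """Return (angle_deg, distance) from the current point to the goal."""
    dx, dy = gx - cx, gy - cy
    angle_deg = math.degrees(math.atan2(dy, dx))  # heading toward the goal
    distance = math.hypot(dx, dy)                 # straight-line distance
    return angle_deg, distance


def distance_travelled(start_reading, current_reading):
    """Distance covered so far, from filtered front-sensor readings.

    Assumes a forward-facing sensor, so the reading decreases as the
    robot approaches the wall ahead of it.
    """
    return start_reading - current_reading


angle, dist = move_to_references(0.0, 0.0, 3.0, 4.0)
# dist is 5.0 and angle is about 53.13 degrees

# The robot stops once it has covered the reference distance
# (hypothetical filtered readings, same units as the coordinates).
done = distance_travelled(start_reading=9.0, current_reading=3.5) >= dist
```

The angle becomes the REFERENCE for PIDControl, and the distance becomes the stopping condition for the forward motion.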

THE COMPASS

I will briefly mention a function that was scrapped as a result of thorough testing: the Compass() function. The goal of this function was to use magnetometer data to find true north and use the compass heading of the robot to update the yaw, counteracting the inevitable drift in the yaw data that comes from integrating the gyroscope. The initial raw values gathered from the magnetometer for each cardinal direction are shown in the plot below:

By subtracting the offset of this circle from the X and Y readings and computing atan2(magY, magX), I was able to obtain the heading of the robot. Testing on a tethered connection to the laptop (not using Bluetooth) proved reliable; however, the readings were far less reliable once implemented on the robot. Below is a correctional function written to reset the zero to approximately the right of the map, which lies a little south of east.
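The offset subtraction and atan2 step can be sketched in Python as follows. This is an illustration rather than the code that ran on the Artemis: the function names, the sample readings, the way the circle's center is estimated (midpoint of the extreme readings), and the `zero_deg` parameter standing in for the zero reset are all assumptions.

```python
import math


def circle_offset(samples):
    """Estimate the center of the magnetometer circle.

    samples -- iterable of (mag_x, mag_y) readings taken while rotating.
    Uses the midpoint of the extremes on each axis (an assumed method).
    """
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    return (max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0


def heading_deg(mag_x, mag_y, offset, zero_deg=0.0):
    """Heading in [-180, 180) degrees after removing the offset.

    zero_deg shifts the zero direction, e.g. to align it with the map.
    """
    raw = math.degrees(math.atan2(mag_y - offset[1], mag_x - offset[0]))
    return (raw - zero_deg + 180.0) % 360.0 - 180.0


# Hypothetical readings at the four cardinal directions.
samples = [(30.0, 10.0), (10.0, 30.0), (-10.0, 10.0), (10.0, -10.0)]
offset = circle_offset(samples)
heading = heading_deg(10.0, 30.0, offset)
```

In this made-up data set the circle is centered at (10, 10), so the reading (10, 30) maps to a heading of 90 degrees.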

The output of this function can be seen in the video below, and the results were disappointing. To verify the issue was not my code, I stored the heading that the magnetometer read and sent it over Bluetooth. Theoretically, if the magnetometer is reading correctly, all the headings after the first command should be the same. As seen below, this is not the case.

The data above is in the format "heading | magnetometer Y reading | magnetometer X reading".

The compass was unreliable at best. The heading was often off by 50 to 70 degrees, which gave horrible results. I instead opted to implement a BLE command that takes a correctional input to add to the yaw reading. Whenever the robot missed the intended goal point, it corrected itself by -5 degrees, and when it hit the point it corrected by +5 degrees. This compensated for the general drift of the robot, and if the robot overcorrected and missed a waypoint, the -5 degree correction would eventually bring the net correction back to zero.

PYTHON LOOP

Now that the fundamental Artemis functions were written, tested, and properly implemented, the only thing left to do was write the basic loop that the Python script would execute in order to navigate and self-correct using localization. Below is the script I used:

The ground truth grid points are plotted in green and only show up once the robot believes it has reached that point. The belief after localization is displayed on the graph as a blue dot. A zone of approximately 31 centimeters determines whether a waypoint has been hit. The first two points are hard-coded into the script, mostly to speed up execution and reduce the average trial time. Every localization took approximately 30 to 40 seconds to complete, so the fewer points we localized at, the better, since the map was a shared space. Additionally, travel from the first waypoint to the second involved Time of Flight sensor readings on a slanted wall while controlling orientation (and shaking because of it). This meant that more often than not, the distance data was inaccurate and the point was missed. To fix this, I added a waypoint perpendicular to the first two points in an effort to read off a horizontal wall. Below are two of the more than forty separate attempts to navigate the path.

The first clip was a very nearly complete run. The robot got all the way to the seventh waypoint before unfortunately running out of battery. The second video depicts the final run, which fully navigates the path. As seen in the video, the localization was incredibly powerful at determining the location, and even after a completely wrong localization belief (caused by similarities in the structure of the surroundings around the robot), the robot could recover enough to complete the navigation. The solution I implemented was far more reliable than any open-loop, timed control algorithm because of the location feedback. I could've improved my solution by implementing some sort of search algorithm or obstacle avoidance routine, but I felt it unnecessary given the distance between waypoints.
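The waypoint check described in this section, where the localized belief must fall within roughly 31 centimeters of the target, can be sketched as follows. This is a Python illustration and not the script used in the runs: the function names and waypoint list are hypothetical, coordinates are assumed to be in meters, and the 31 cm zone is treated as a radius around the waypoint.

```python
import math

HIT_RADIUS_M = 0.31  # assumed: the 31 cm zone treated as a radius in meters


def waypoint_hit(belief_xy, waypoint_xy, radius=HIT_RADIUS_M):
    """True if the localized belief lies within the hit zone."""
    dx = belief_xy[0] - waypoint_xy[0]
    dy = belief_xy[1] - waypoint_xy[1]
    return math.hypot(dx, dy) <= radius


def next_target_index(belief_xy, waypoints, index):
    """Advance to the next waypoint only if the current one was hit."""
    if waypoint_hit(belief_xy, waypoints[index]):
        return index + 1
    return index


# Hypothetical waypoints and beliefs, in meters.
waypoints = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
index = next_target_index((0.95, 0.10), waypoints, 1)   # close enough: advance
retry = next_target_index((0.50, 0.50), waypoints, 1)   # missed: try again
```

When a waypoint is missed, the index stays put, so the next MoveTo call starts from the new belief and heads for the same target again.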

CONCLUSION

This class has taught me so much about the fundamentals of robotics. The countless hours of debugging scripts or hardware, writing and rewriting code, properly organizing that code, and documenting everything have been invaluable to my robotics education. I feel confident the work I've done has made a lasting impact on me, and I am so grateful for all the kind help I received from the TAs and friendly classmates. A special thank you is owed to Professor Kirsten Peterson for her excellent explanations of complex concepts like the Kalman Filter and the Bayes Filter, to Anya Prabowo and Jonathon Jaramillo for hosting so many helpful lab hours that debugged impossible code issues, and to Rafael Gottlieb for his partnership on this lab.